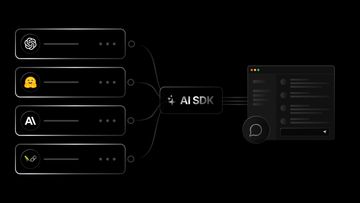

How I Tested Streaming Responses with Vercel AI SDK

TL;DR

I figured out how to unit test streaming AI responses using the Vercel AI SDK, including mocking AI responses, handling malformed data and testing user interruptions.

You’ll see me struggling with:

- Mocking streaming responses correctly

- Testing client-side useCompletion behavior

- Simulating user interruptions

- Testing server-side completions

If you’re building with the AI SDK and wondering “how do I test this?”, this is the post for you!

Why I Wrote This

I've known about Vercel's ai-sdk for a while but never had the chance to work with it. I've always been curious how AI streaming works and… how can I test it?

Unfortunately, testing with the AI SDK isn't very well documented. The official docs only provide a few helper methods, without showing how to actually use them.

Before diving deeper, I googled, I read the docs, I even asked ChatGPT. I still couldn't find anything about writing unit tests with the AI SDK.

So I decided to write about it myself. Hopefully this helps someone in the future.

Mocking AI Streaming on the Client Side

After working on this for a few days, I figured out the basics of AI tools. I built a UI using the SDK’s useCompletion hook:

const { isLoading, stop, completion, error, setInput, handleSubmit } = useCompletion({

api: '/api/completion',

initialInput: ...

});

And the AI response comes in as a ReadableStream (see MDN docs).
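If ReadableStream is new to you too, here is a minimal sketch of what reading one looks like, using only standard Web APIs. The names `makeByteStream` and `readAllText` are made up for illustration; this is not how useCompletion reads the response internally, just the general idea of consuming bytes chunk by chunk.

```javascript
// Build a tiny byte stream by hand, similar in shape to what a streaming HTTP body delivers.
function makeByteStream(parts) {
  const encoder = new TextEncoder();
  return new ReadableStream({
    start(controller) {
      for (const part of parts) controller.enqueue(encoder.encode(part));
      controller.close();
    },
  });
}

// Read the stream chunk by chunk, decoding bytes to text as they arrive.
async function readAllText(stream, onChunk = () => {}) {
  const reader = stream.getReader();
  const decoder = new TextDecoder();
  let text = "";
  while (true) {
    const { done, value } = await reader.read();
    if (done) break;
    const piece = decoder.decode(value, { stream: true });
    text += piece;
    onChunk(piece); // a UI would re-render here with the partial text
  }
  return text + decoder.decode(); // flush any bytes still buffered in the decoder
}
```

Calling `readAllText(makeByteStream(["This", " is an ", "example."]))` resolves to the joined text, while `onChunk` fires once per piece along the way.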

After I got it working, a question popped up in my mind: Wait, how can I test this? The data comes back unpredictably, the stream is non-deterministic and I’ve never touched ReadableStream in my life!

I turned to msw, since I was already familiar with it and remembered that it supports ReadableStream.

My First Attempt

At first, I tried msw's own example with a raw ReadableStream, hoping it would work. (Spoiler: it didn't)

http.post('/api/completion', () => {

const stream = new ReadableStream({

start(controller) {

controller.enqueue(new TextEncoder().encode('hello'))

controller.enqueue(new TextEncoder().encode('world'))

controller.close()

},

})

return new HttpResponse(stream, {

headers: {

'content-type': 'text/plain',

},

})

})

I hit all kinds of weird errors, like Error: Failed to parse stream string. No separator found., coming from the Vercel SDK itself, which seems to be validating the schema with zod.

I asked ChatGPT, but I couldn’t find anything useful… Is it even possible to test this?

Eventually, I found this GitHub thread: How do I create a stream from scratch?. Boom. There's a helper for building a mock ReadableStream that the ai-sdk accepts: simulateReadableStream!
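In hindsight, the raw msw example probably failed because the SDK expects each line to look like `TYPE:JSON` (for example `0:"hello"`), and a bare `hello` has no `:` separator. Here is a simplified sketch of that kind of check. `parseStreamLine` is a hypothetical function written for this post, not the SDK's actual parser.

```javascript
// Hypothetical, simplified parser for one data-stream line such as `0:"hello"`.
// It mimics the kind of check that appears to raise the error above; it is not the SDK's code.
function parseStreamLine(line) {
  const separatorIndex = line.indexOf(":");
  if (separatorIndex === -1) {
    throw new Error("Failed to parse stream string. No separator found.");
  }
  const type = line.slice(0, separatorIndex); // e.g. "0" for a text part
  const value = JSON.parse(line.slice(separatorIndex + 1)); // payload is JSON
  return { type, value };
}

console.log(parseStreamLine('0:"hello"')); // a well-formed text part

try {
  parseStreamLine("hello"); // what the raw msw example effectively sent
} catch (error) {
  console.log(error.message);
}
```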

Test Basic Flow

Here’s how I mocked a full AI response using msw and simulateReadableStream:

const server = setupServer(

http.post('/api/completion', async () => {

// https://ai-sdk.dev/docs/ai-sdk-core/testing

// `simulateReadableStream` emits the chunks as given, with delays; the chunks themselves follow the data stream format: `0:"text"\n`, `d:{...}\n`

const stream = simulateReadableStream({

initialDelayInMs: 1,

chunkDelayInMs: 5,

chunks: [

`0:"This"\n`,

`0:" is an "\n`,

`0:"example. "\n`,

`e:{"finishReason":"stop","usage":{"promptTokens":20,"completionTokens":50},"isContinued":false}\n`,

`d:{"finishReason":"stop","usage":{"promptTokens":20,"completionTokens":50}}\n`,

],

}).pipeThrough(new TextEncoderStream());

return new HttpResponse(stream, {

status: 200,

headers: {

'X-Vercel-AI-Data-Stream': 'v1',

'Content-Type': 'text/plain; charset=utf-8',

},

});

}),

);

Then in the test:

const askButton = screen.getByRole("button", { name: "Ask" });

await user.click(askButton);

expect(await screen.findByText("Loading...")).toBeInTheDocument();

expect(await screen.findByText("This is an example.")).toBeInTheDocument();

All green. ✅
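Writing the `0:"..."\n` chunks by hand gets error-prone once the text contains quotes or newlines. Below is a small sketch of helpers that could build them, plus a hand-rolled stand-in that shows roughly what a delayed chunk stream does. All three names (`textPart`, `finishPart`, `delayedStream`) are hypothetical; in the real tests I'd still use the SDK's simulateReadableStream.

```javascript
// Hypothetical helpers for building data-stream chunks.
// JSON.stringify takes care of escaping quotes and newlines inside the text.
const textPart = (text) => `0:${JSON.stringify(text)}\n`;
const finishPart = (finishReason = "stop") => `d:${JSON.stringify({ finishReason })}\n`;

// Hand-rolled stand-in for a delayed chunk stream (not the SDK's implementation).
function delayedStream({ chunks, initialDelayInMs = 0, chunkDelayInMs = 0 }) {
  const wait = (ms) => new Promise((resolve) => setTimeout(resolve, ms));
  let index = 0;
  return new ReadableStream({
    // pull() runs whenever the consumer is ready for more data.
    async pull(controller) {
      if (index >= chunks.length) {
        controller.close();
        return;
      }
      await wait(index === 0 ? initialDelayInMs : chunkDelayInMs);
      controller.enqueue(chunks[index++]);
    },
  });
}
```

With these, the chunk list from the mock above could be written as `[textPart("This"), textPart(" is an "), textPart("example. "), finishPart()]`.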

Test User Interruptions

To support user cancellation, I added a “Stop” button to my UI:

const { isLoading, stop, completion, error, setInput, handleSubmit } = useCompletion({

api: '/api/completion',

initialInput: ...

});

<Button onClick={stop} disabled={!isLoading}>Stop</Button>

Since simulateReadableStream supports a chunkDelayInMs option, I thought I could simulate user interruptions by combining it with jest.useFakeTimers() and msw. (Spoiler: I couldn't)

This was my setup:

http.post('/api/completion', async () => {

const stream = simulateReadableStream({

chunkDelayInMs: 200,

chunks: [

`0:"First "\n`,

`0:"second "\n`,

`0:"third "\n`,

`0:"fourth "\n`,

`e:{"finishReason":"stop","usage":{"promptTokens":20,"completionTokens":50},"isContinued":false}\n`,

`d:{"finishReason":"stop","usage":{"promptTokens":20,"completionTokens":50}}\n`,

],

}).pipeThrough(new TextEncoderStream());

return new HttpResponse(stream, {

status: 200,

headers: {

'X-Vercel-AI-Data-Stream': 'v1',

'Content-Type': 'text/plain; charset=utf-8',

},

});

}),

Then, I thought I could use jest.useFakeTimers() in the test:

jest.useFakeTimers();

...

const askButton = screen.getByRole("button", { name: "Ask" });

await user.click(askButton);

expect(await screen.findByText("Loading...")).toBeInTheDocument();

act(() => {

jest.advanceTimersByTime(200);

})

expect(await screen.findByText("First")).toBeInTheDocument();

const stopButton = screen.getByRole("button", { name: "Stop" });

await user.click(stopButton);

jest.advanceTimersByTime(1000);

await waitFor(() => {

expect(screen.queryByText("third")).not.toBeInTheDocument();

});

expect(await screen.findByTestId("completion")).toHaveTextContent('First');

It passed, hurray! But when I removed the stop() call on purpose, it still passed. Oh… false positive.

Why the Test Doesn’t Work

In the previous test, I used:

expect(await screen.findByTestId("completion")).toHaveTextContent('First');

The assertion wasn't strict enough. It would still pass even if the actual DOM contained the full response like First second third fourth.
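To make the problem concrete, here is a plain JavaScript sketch of the difference between a substring check and an exact check. The helpers `containsText` and `equalsText` are stand-ins I made up; they only imitate the spirit of DOM text matchers and are not jest-dom's implementation.

```javascript
// Collapse whitespace first, similar in spirit to how DOM text matchers normalize text.
const normalize = (text) => text.replace(/\s+/g, " ").trim();

// Substring check: passes as long as the expected text appears somewhere.
const containsText = (actual, expected) => normalize(actual).includes(expected);

// Exact check: passes only when nothing else was rendered.
const equalsText = (actual, expected) => normalize(actual) === expected;

const stopped = "First ";
const notStopped = "First second third fourth ";

console.log(containsText(stopped, "First"), containsText(notStopped, "First")); // true true
console.log(equalsText(stopped, "First"), equalsText(notStopped, "First")); // true false
```

As far as I can tell, passing a regular expression such as `/^First$/` to toHaveTextContent would be one way to get the stricter behavior in the real test.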

I also noticed that even after pressing the “Stop” button, the entire response continued streaming. When I logged the AbortSignal inside the msw mock, I saw that signal.aborted remained false.

Turns out, msw doesn’t support aborting a stream in progress. (mswjs/msw#2104)

After digging through documentation and a few rounds with ChatGPT, I nearly gave up, until ChatGPT suggested a workaround.

Oh yes, I can spy on AbortController, since useCompletion internally uses AbortController for cancellation! (see source)
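If the idea of spying on a prototype feels like magic, here is a hand-written sketch of roughly what it amounts to. `spyOnAbort` and `hypotheticalStopHandler` are illustrative names of my own; the real test uses jest.spyOn, and this is not how Jest implements it.

```javascript
// Minimal hand-written spy, to illustrate the idea behind spying on AbortController.prototype.abort.
function spyOnAbort() {
  const original = AbortController.prototype.abort;
  const spy = {
    calls: 0,
    restore() {
      AbortController.prototype.abort = original;
    },
  };
  AbortController.prototype.abort = function (...args) {
    spy.calls += 1;
    return original.apply(this, args); // keep the real behavior
  };
  return spy;
}

// Stand-in for library code that owns its own controller, like a stop() handler would.
function hypotheticalStopHandler() {
  const controller = new AbortController();
  controller.abort();
  return controller.signal.aborted;
}

const spy = spyOnAbort();
hypotheticalStopHandler();
console.log(spy.calls); // 1
spy.restore();
```

Because the method is patched on the prototype, the spy sees calls from any controller instance, including ones created deep inside a library.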

Final Working Test

This is the final version:

const abortSpy = jest.spyOn(AbortController.prototype, 'abort');

const { user } = renderComponent();

const askButton = screen.getByRole("button", { name: "Ask" });

expect(screen.getByRole("button", { name: "Ask" })).toBeInTheDocument();

await user.click(askButton);

expect(await screen.findByText("Loading...")).toBeInTheDocument();

expect(abortSpy).not.toHaveBeenCalled();

await waitFor(() => {

expect(screen.queryByText("Loading...")).not.toBeInTheDocument();

});

const stopButton = screen.getByRole("button", { name: "Stop" });

await user.click(stopButton);

expect(abortSpy).toHaveBeenCalledTimes(1);

This test doesn’t verify that the stream output actually stops in the UI, since msw still returns the full response, but it does fail if the “Stop” button is disconnected from the SDK.

This approach isn’t perfect, but it’s enough to catch real regressions for now.
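For comparison, here is a sketch of what an abort-aware stream could look like if the mock layer did pass the signal through. This is purely illustrative: `abortableStream` and `collectUntilStopped` are hypothetical, and a real fetch would reject the pending read with an abort error rather than quietly closing the stream.

```javascript
// Hypothetical abort-aware stream: emits chunks on a timer and stops once the signal aborts.
function abortableStream(chunks, signal, chunkDelayInMs = 5) {
  let index = 0;
  return new ReadableStream({
    async pull(controller) {
      await new Promise((resolve) => setTimeout(resolve, chunkDelayInMs));
      // Check the signal after the delay, right before emitting the next chunk.
      if (signal.aborted || index >= chunks.length) {
        controller.close();
        return;
      }
      controller.enqueue(chunks[index++]);
    },
  });
}

// Consume the stream, aborting after `stopAfter` chunks, and return what was received.
async function collectUntilStopped(chunks, stopAfter) {
  const controller = new AbortController();
  const reader = abortableStream(chunks, controller.signal).getReader();
  const received = [];
  while (true) {
    const { done, value } = await reader.read();
    if (done) break;
    received.push(value);
    if (received.length === stopAfter) controller.abort(); // simulate pressing "Stop"
  }
  return received;
}
```

With a stream like this, stopping after the first chunk would leave only `"First "` in the output, which is the behavior the UI assertion was hoping to observe.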

Testing Malformed Responses

Now let’s try a broken response!

http.post('/api/completion', () => {

const stream = simulateReadableStream({

chunks: [

`0:"First part "\n`,

`bad data\n`,

`0:"Second part"\n`,

`d:{"finishReason":"stop"}\n`,

],

}).pipeThrough(new TextEncoderStream());

return new HttpResponse(stream, {

status: 200,

headers: {

'X-Vercel-AI-Data-Stream': 'v1',

'Content-Type': 'text/plain; charset=utf-8',

},

});

})

Then in the test:

const askButton = screen.getByRole("button", { name: "Ask" });

await user.click(askButton);

expect(await screen.findByText("First part")).toBeInTheDocument();

const errorText = await screen.findByText("Sorry, something went wrong.");

expect(errorText).toBeInTheDocument();

expect(screen.queryByText("Second part")).not.toBeInTheDocument();

You can see it shows the first chunk and the error state in the test. ✅
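My mental model of why "Second part" never shows up: the text received before the bad line is kept, and processing stops at the first line that can't be parsed. Here is a rough sketch of that flow. `consumeLines` is a hypothetical function, and the SDK's real error handling may well differ in the details.

```javascript
// Hypothetical consumer: accumulates text parts and stops at the first malformed line.
function consumeLines(lines) {
  let completion = "";
  for (const line of lines) {
    const separatorIndex = line.indexOf(":");
    if (separatorIndex === -1) {
      // Keep what was received so far and surface an error for the UI.
      return { completion, error: new Error(`Malformed stream line: ${line}`) };
    }
    const type = line.slice(0, separatorIndex);
    let value;
    try {
      value = JSON.parse(line.slice(separatorIndex + 1));
    } catch (cause) {
      return { completion, error: new Error(`Invalid JSON in stream line: ${line}`) };
    }
    if (type === "0") completion += value; // only text parts contribute to the completion
  }
  return { completion, error: null };
}

const result = consumeLines([
  '0:"First part "',
  "bad data",
  '0:"Second part"',
  'd:{"finishReason":"stop"}',
]);
console.log(result.completion, result.error !== null);
```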

Mocking Responses on the Server Side

I also had a server-side route to test. My /api/completion endpoint uses Groq’s model like this:

const result = streamText({

model: groq('llama-3.1-8b-instant'),

prompt: `Suggest one movie...`,

});

...

return result.toDataStreamResponse();

For testing, I set up a mock just like before, but for the Groq API endpoint: https://api.groq.com/openai/v1/chat/completions.

const server = setupServer(

http.post('https://api.groq.com/openai/v1/chat/completions', () => {

const rawStream = simulateReadableStream({

chunks: [

`0:"Hello"\n`,

`0:" world!"\n`,

`d:{"finishReason":"stop"}\n`,

],

});

const encodedStream = rawStream.pipeThrough(new TextEncoderStream());

return new HttpResponse(encodedStream, {

status: 200,

headers: {

'Content-Type': 'text/plain; charset=utf-8',

'X-Vercel-AI-Data-Stream': 'v1',

},

});

}),

);

Then I wrote a test:

it('should return a successful response', async () => {

const mockRequest = new Request('http://localhost/api/completion', {

method: 'POST',

headers: { 'Content-Type': 'application/json' },

body: JSON.stringify({

prompt: JSON.stringify({

genre: "Action",

hour: "2"

})

})

});

const response = await POST(mockRequest);

expect(response.status).toEqual(200);

expect(response.body).toBeInstanceOf(ReadableStream);

});

Unfortunately, this approach is incomplete: it only checks that the response body is a ReadableStream.
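One caveat I'm not certain about: the mock above sends Vercel data-stream parts from the Groq URL, whereas Groq's endpoint is OpenAI-compatible and, as far as I know, streams server-sent events (`data: {...}` lines ending with `data: [DONE]`). If that matters for the provider, the mock chunks might need a shape more like the sketch below. `sseChunk` is a hypothetical helper, the `id` and `created` values are placeholders, and I haven't verified this against the Groq provider.

```javascript
// Hypothetical helper: one OpenAI-style chat completion chunk as a server-sent event.
// Field values such as id and created are placeholders, not real Groq output.
function sseChunk(content, finishReason = null) {
  const payload = {
    id: "chatcmpl-test",
    object: "chat.completion.chunk",
    created: 0,
    model: "llama-3.1-8b-instant",
    choices: [
      {
        index: 0,
        delta: content === null ? {} : { content },
        finish_reason: finishReason,
      },
    ],
  };
  return `data: ${JSON.stringify(payload)}\n\n`; // SSE events end with a blank line
}

// Assumed chunk sequence for a short "Hello world!" completion.
const groqStyleChunks = [
  sseChunk("Hello"),
  sseChunk(" world!"),
  sseChunk(null, "stop"),
  "data: [DONE]\n\n",
];
```

A mock using these chunks would presumably also send `Content-Type: text/event-stream` instead of the Vercel data-stream header.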

I had a hard time figuring out how to test the actual contents, since there are some subtle differences between ReadableStream in the browser and in Node.js.

Even with polyfills, I got errors like:

TypeError: The "transform.writable" property must be an instance of WritableStream. Received an instance of WritableStream

TypeError: The "transform.readable" property must be an instance of ReadableStream.

Error: First parameter has member 'readable' that is not a ReadableStream.

If you’ve solved this, reach out and let me know!
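One idea that might sidestep those errors is to avoid piping the body through a transform stream at all, and instead read it as text with `response.text()` and pick out the text parts. Here is a minimal sketch, assuming a runtime with a built-in Response (such as Node 18+) and a body in the `0:"..."` data-stream format. `completionFromResponse` is a hypothetical helper, and I haven't confirmed it works in a polyfilled Jest environment.

```javascript
// Hypothetical helper: read the whole body as text, then join the `0:` text parts.
// No pipeThrough or TransformStream is involved.
async function completionFromResponse(response) {
  const body = await response.text();
  return body
    .split("\n")
    .filter((line) => line.startsWith("0:")) // keep only text parts
    .map((line) => JSON.parse(line.slice(2))) // payload after `0:` is a JSON string
    .join("");
}
```

In the route test, this could back an assertion on the returned completion text instead of only checking that the body is a ReadableStream.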

Final Thoughts

As a frontend engineer, I don’t get to work with ReadableStream often. Thanks to ai-sdk, I had a real use case and I was finally able to test streaming AI responses.

It felt amazing to test something I thought I’d never figure out. Hopefully this post helps someone else!

If you have other testing tips for AI apps, I’d love to hear them too :)

Useful Links

- Repo - You can find more tests there.

- Vercel AI SDK Docs